I feel pretty strongly that any organization that aspires to data-driven decision making should be exporting GA4 data to BigQuery. And the time to start is now, since there’s no way to backfill data—you can only start exporting today’s data today.

I’ll start by describing what the export is and isn’t, but feel free to skip ahead to the five reasons.

First, what the GA4 BigQuery export is, and what it isn’t

When I looked at the export data for the first time, I was surprised by what I saw. I was expecting to see what I assumed to be under the hood in GA4: aggregated tables structured for reporting on user, session, event and ecommerce metrics. It’s not that at all. It is the raw event data that can be used to build those things. Each record is an event sent by a GA4 tag that fired in a visitors browser – the data is completely un-aggregated.

That makes it much harder to work with. You can’t, for example, hook up Looker Studio directly to the event data and get much of anything. You will have to transform it into the dimensions and metrics you care about. To do that, you will need:

- Proficiency in ‘Standard SQL’, the dialect of SQL used by BigQuery

- A solid understanding of the complete definitions of GA4 dimensions and metrics

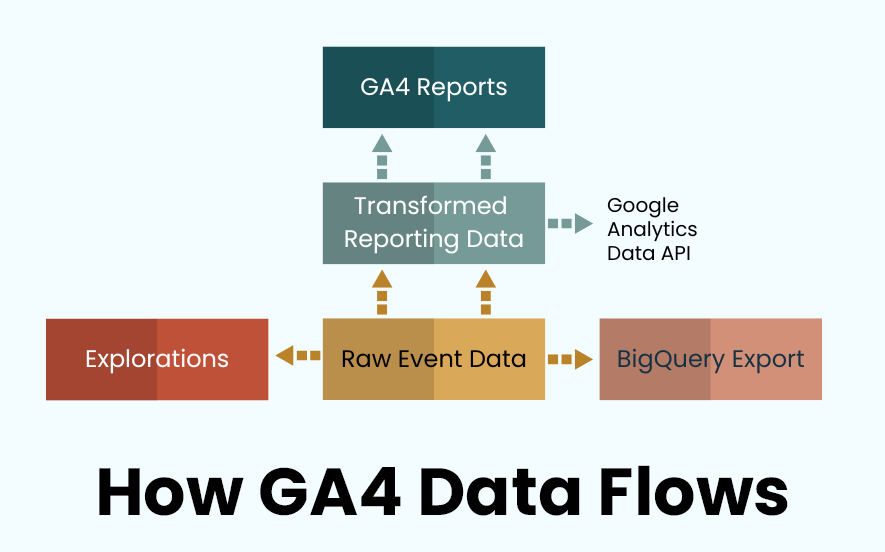

A point of confusion is the fact that GA also has a reporting API that gives you access to data that has already been transformed. This is the API that Looker Studio and other 3rd-party apps use to integrate with GA4. Below is a high-level schematic of how GA4 data flows.

So, the data in the BigQuery export is un-aggregated and pretty complicated to work with. With that said, let’s get into why it’s definitely worth it to set up the export.

Five Reasons to Set Up the GA4 BigQuery Export

Reason #1: Unfettered analysis

If you’ve spent much time in GA4, you know that the reporting interface is, shall we say, lacking. It can be really tedious to get at the data you want, and has basic limitations like the fact that you can report on a maximum of two dimensions at a time and charts are not customizable. A lot of this is overcome-able with Looker Studio, but the BigQuery export opens up a whole new world of opportunities. Here are a few mini case studies that demonstrate what’s possible:

- For a B2B client focused on lead gen, we built a reverse-path-analysis report that shows traffic sources and detailed website paths for users that ultimately fill out a lead-gen form.

- For a travel publisher that generates revenue from affiliate links, we recreated the Universal Analytics Page value metric, helping them to understand which content is most likely to contribute to revenue.

- For a consumer finance publisher, we built a solution that ties GA4 content groups to Google Search Console data to quickly identify and prioritize content topics that are trending up or down in organic search.

- For a logistics company we joined Salesforce and GA4 data to show how different categories of businesses are interacting with a customer portal.

- For several clients we are feeding user-path data into AI models to do qualitative analysis of website performance.

These are just a few examples based on specific queries and data models I have constructed – the key takeaway is: if you can formulate a question that should be answerable with the data, it probably is answerable if you free yourself from the constraints of GA4 reporting.

Reason #2: Increased accuracy

With GA4 data in BigQuery, you get the same results every time you run a report, and you know exactly how numbers are calculated. This is very much in contrast with regular GA4 reporting, which is subject to any or all of the following:

- Sampling can happen when a report is based on a large number of events.

- Thresholding is applied when GA4 thinks a dimension or combination of dimensions might reveal the “identity or sensitive information of individual users based on demographics, interests, or other signals present in the data”.

- Cardinality limits the number of rows you can report on for dimensions with a lot of values, like product sales or page paths.

- Metrics such as Sessions and Users are based on estimates versus actual counts.

Some issues are baked into GA4 reporting, but human error can also affect the accuracy of data. I recently dealt with a situation where a client had mis-configured a trigger in Google Tag Manager, resulting in a key event being significantly overcounted. In BigQuery, I was able to clean out all but the legitimate events.

Reason #3: Bot traffic removal

One of my biggest gripes with GA4 is the fact that you have limited options for filtering out bot traffic, and once a bot has polluted your GA4 data, there is no way to remove it. I have spent too much time hunting for and dealing with bots, and I can say that most GA4 properties I encounter have at least some bot traffic skewing metrics.

With BigQuery, you have direct access to all of the underlying data, so once you have identified bot traffic, it is easy to remove.

If you are familiar with the view filtering capabilities of Universal Analytics, you have all the same power in BigQuery, and you can filter data retroactively!

Reason #4: Meaningful statistics from users who have opted out of cookies

I’m surprised more people aren’t talking about this. There are privacy laws in many countries and states that limit our ability to track user behavior, and it is only getting harder as regulations get stricter and more prevalent. But this pertains to data that can be tied to a user identifier. With Google Consent Mode in place, you still collect data for opted-out users, but user and session IDs are removed. This means you can’t do things like tie an ad click to a landing page to a conversion, but you can still report on page views, conversion counts, and a variety of other metrics that don’t require associating multiple events with the same user.

Theoretically, GA4 reporting has the ability to model behavior for opted out users, but in my experience few properties meet the prerequisites to do so. The only prerequisite for getting the data in BigQuery is that you have to have implemented Consent Mode.

The reality is that we are all going to have to get comfortable with the fact that we can’t and shouldn’t track people over time without their explicit consent. This is a powerful tool in the face of that reality.

Reason #5: Faster, better reporting

Let’s face it, GA4 reporting is a train wreck. When Google Analytics first hit the scene in 2007, the reporting was fast and comparatively intuitive (anyone remember Crystal Reports?). They had made incremental improvements over the years, but took a big step back with GA4. Meanwhile, the rest of the world got a lot more savvy about data exploration and visualization.

My theory is this: somewhere in the GA product planning process there was an argument about who to please. One side argued reporting should be easy-to-use for non-analysts, and the other side argued that it should meet the needs of users who are fluent with data visualization in Tableau or jupyter notebooks. Both sides won. The GA4 UI most certainly is aimed at the lowest common denominator, because they knew they could not satisfy the latter community without alienating the former. So they made the BigQuery export free and available to everyone.

Advanced analysts can and do use the export to build reporting solutions in their tool of choice. My own usage breaks out about like this:

- 20% of the time I go to GA4 reporting to answer questions

- 30% of the time, I use Looker Studio

- 50% of the time, I use something else to explore and visualize data, including BigQuery itself, Google CoLab, Tableau and Google Sheets.

The next time you beat your head against a wall wondering why you can’t get the data you need from GA4, remember, there is a better way.

Bonus Reason: Own your data

Having just gone through the painful process of backing up Universal Analytics for a number of clients, I’ve got to add this one to the list. Google could decide tomorrow to shut down GA4, or limit access to historical data. I doubt it will happen tomorrow, but it will happen, and when it does, exporting data from the web UI will be a poor substitute for having comprehensive data in BigQuery.

What Does Exporting GA4 Data to BigQuery Cost?

The short version is: if your GA4 property tracks fewer than a million events per month, your BigQuery cost should be less than $10.

The export itself doesn’t cost anything, so the two primary costs are BigQuery usage and data engineering.

The Cost of BigQuery

The cost of BigQuery usage is pretty complicated to calculate, but it is not as much as you might think. It is based on data storage and compute time, and there is a generous free tier. The free tier includes up to ten gibibytes (almost the same as gigabytes) of storage and one tebibyte (almost the same as a terabyte) of compute time. You only pay for usage above the free-tier thresholds.

When my BigQuery project only had the twooctobers.com GA4 property’s data, it fell within the free tier and I didn’t pay anything. The volume of events you collect is a pretty good predictor of how much storage you will use, and our property generates about 60k events per month. You can see how many events your GA4 property is collecting under Reports > Engagement > Events.

The same project now has GA4 exports from seven different GA4 properties, which combined generate about 1.5 million events per month. All in, BigQuery fees were $3.21 last month.

If you are dealing with sites that get a lot more traffic and therefore generate more events, storage costs will scale proportionately, but the bigger variable is compute time.

I keep compute costs low by pre-aggregating data versus querying the raw events tables, and being judicious about processes that run automatically every day. I just started working with an organization that has been less judicious, and their compute costs are about $200 per month. Still pretty reasonable, but if you are sloppy with your queries, you can end up paying a lot more.

If you’d like to more accurately estimate what your costs will be, this Analytics Canvas article does a great job of breaking it down.

The Cost of Data Engineering

The other primary cost is data engineering. As I described above, the raw event data isn’t particularly useful as is – it needs to be transformed to be usable for reporting and analysis.

This cost is harder to predict, and to be honest I am an unreliable narrator. I have been working with Google Analytics for more than 20 years now (if you include Urchin), and I thought I was pretty much an expert before GA4 came along. Digging into the BigQuery data has taught me so much I didn’t realize I didn’t know.

So, yes, it takes a bit of time and effort to learn how to work with the data and engineer standard and custom reports. But there is a huge intangible benefit that comes from the effort. You will develop a much deeper understanding of what the data represents, and a level of fluency that will empower you to answer questions you didn’t know you could ask.

There are also a lot of resources available to help you get a solution in place. I mention a few of my favorites below. And of course you can rely on a partner to manage this side of things for you.

Other Downsides

A key reason not to proceed with GA4 data in BigQuery is the previous point: there is a pretty steep learning curve to working with the data, and you may not have the ability to hire an expert to help you get there. Other limitations of the GA4 export data:

- You don’t get any historical data – the data starts from the day the export starts.

- It does not include demographics and interest data associated with Google Signals

- It does not include Google Ads click and cost data. However, Google did just recently add a session_traffic_source_last_click record to the export data that includes a bunch of useful Ads data.

- Since it is raw event data, GA4 predictive and modeled metrics are challenging to reconstruct. Notably, the data-driven attribution model used in Advertising reports is nigh-on impossible to recreate. On the plus side, you can experiment with your own attribution models.

- The daily export is capped at one million events per day, unless you pay for Google Analytics 360.

If you decide that now is not the time to dive into this, here is one thing to think about: setting up the export itself is dead easy, and data storage in BigQuery is cheap. Consider setting up the export even if you don’t plan on doing anything with it in the near term. When you are ready to dig in, you will be glad you did.

Resources

This post is about why, not how, but I will share a few resources that have been helpful to me.

- ga4bigquery.com is the definitive resource, in my opinion. Some of the more advanced techniques and examples require a subscription to access (worth it), but there’s a lot to get you started for free.

- I also found this course invaluable: Query GA4 Data In Google BigQuery – created by Johan van de Werken, the author of ga4bigquery.com, in partnership with the inestimable Simo Ahava.

- Sessionization is an important concept to get your head around when working with GA4 data. The basic idea is that organizing GA4 events around user sessions significantly reduces compute costs and simplifies the process of generating metrics for reporting. This article helped me understand why it matters: GA4 Sessionization and Traffic Source handling in BigQuery.

- On the subject of sessionization, a Googler published this github repository with Google Dataform code to sessionize GA4 data: ga4_dataform.

- This tool writes queries for you. It isn’t perfect. I usually have to tweak things quite a bit to get the result I want, but it can be a time-saver for getting started.

I have found that ChatGPT and other LLMs are so-so at helping with writing queries. The structure of GA4 data is weird, and there’s a lot of misinformation out there, so I don’t think AI is quite up to speed. +1 for the humans.

If you have questions or are just stuck with where to start, please comment below or reach out. There’s an email link to contact me at the bottom of this page. And give us a shout if you’d like the benefits of having your GA4 data in BigQuery, but don’t want to deal with the in-depth technical parts. Two Octobers can help elevate your team’s marketing analytics too.